Technical SEO is the foundation that makes a website discoverable: it ensures that search engines can crawl, index, and rank your pages. As we move into 2024, understanding its fundamentals matters more than ever. This article covers the basics and best practices of technical SEO, including website architecture, site speed optimization, mobile-friendliness, and more. By following current methods and trends, you can make your site more visible, improve the user experience, attract more organic traffic, and move up in search engine results.

Basics and Best Practices of Technical SEO in 2024

In 2024, the basics of technical SEO focus on making sites faster, more mobile-friendly, and more secure through HTTPS. Best practices include adding structured data to qualify for rich snippets, keeping URLs clean, and running regular full-site audits. Prioritizing these areas improves a site’s performance, user experience, and visibility in search engines.

Crawling and Indexing of Websites

Crawling and indexing are the basic processes search engines use to discover and store web pages. During crawling, bots (such as Googlebot) follow links to find new and updated content; during indexing, the search engine stores and organizes those pages so they can be retrieved later. Efficient crawling and indexing ensure that your site’s content appears correctly in search engine results pages (SERPs), which improves its exposure and accessibility.
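Before crawling a site, well-behaved bots read its robots.txt file to see which paths they may visit. The sketch below uses Python’s standard-library `urllib.robotparser` to check whether a given URL is crawlable; the rules and `example.com` URLs are hypothetical, chosen only for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for an example site.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/

Sitemap: https://www.example.com/sitemap.xml
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content supplied as a string (no network access needed)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

if __name__ == "__main__":
    rp = build_parser(ROBOTS_TXT)
    for url in ("https://www.example.com/blog/technical-seo",
                "https://www.example.com/admin/settings"):
        verdict = "allowed" if rp.can_fetch("Googlebot", url) else "blocked"
        print(url, "->", verdict)
```

The standard-library parser implements simple prefix matching and does not reproduce every detail of how Google interprets robots.txt (for example, wildcard patterns), so treat it as a quick sanity check rather than an authoritative test. Note also that robots.txt controls crawling, not indexing.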

The Importance of Indexing

Indexing is the process by which search engines analyze the information on crawled web pages and store it in their index, a large database of content. The index lets search engines quickly retrieve pages that are relevant to a user’s query. A page that is not indexed cannot appear in search results at all, so making sure your pages are indexable directly affects how visible your website is and how high it can rank.
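To make the idea of an index concrete, here is a deliberately toy inverted index: it maps each word to the pages that contain it, so a query can be answered by looking words up instead of rescanning every page. Real search engines are vastly more sophisticated (tokenization, ranking signals, and much more); the page paths and text below are hypothetical.

```python
from collections import defaultdict

def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map each lowercase word to the set of page paths that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for path, text in pages.items():
        for word in text.lower().split():
            index[word.strip(".,!?")].add(path)
    return index

def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return pages containing every word in the query (simple AND matching)."""
    words = [w.strip(".,!?") for w in query.lower().split()]
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results

# Hypothetical crawled pages.
PAGES = {
    "/site-speed": "Compress images to improve site speed.",
    "/mobile": "Responsive design improves mobile usability.",
    "/https": "HTTPS encrypts traffic and improves trust.",
}

if __name__ == "__main__":
    idx = build_index(PAGES)
    print(search(idx, "site speed"))
    print(search(idx, "improves"))
```

A page that never makes it into the index simply has no entries to match, which is why an unindexed page cannot rank for anything.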

Tools for Monitoring Crawling and Indexing

Several tools let website owners monitor how search engines crawl and index their sites. Google Search Console, for example, reports crawl errors, crawl statistics, and the indexing status of individual pages. By reviewing these reports regularly, webmasters can catch and fix problems such as crawl errors or pages that are not indexed, which improves the site’s visibility and performance in search.

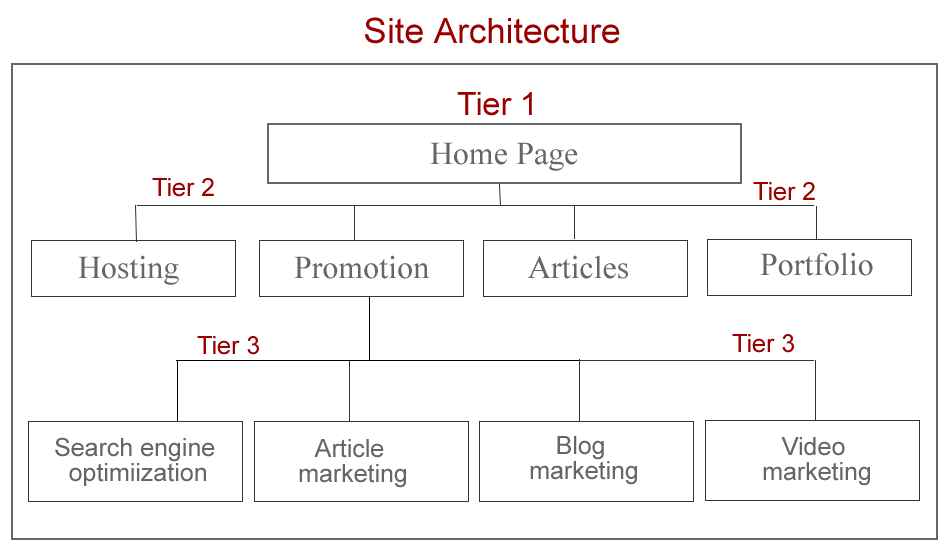

Website Architecture

Website architecture is the way a site’s information, navigation, and functions are organized and structured. A well-planned architecture improves the user experience and makes the site easier for search engines to crawl and index, which helps SEO.

Importance of a Clear Website Structure

A clear, easy-to-understand structure helps both users and search engines find content. It also helps distribute link value (often called link equity) through internal links, which benefits SEO. When categories and hierarchies are clear, visitors can quickly find what they are looking for, which lowers bounce rates and increases engagement.

Optimizing Content Organization

Organizing content in a logical hierarchy helps both users and search engines understand what the site is about. Starting with broad sections and grouping related content into categories and subcategories supports SEO. This approach also works well with internal linking, which guides users to related content and strengthens the site’s SEO as a whole.
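One way to check whether a hierarchy is working is to measure click depth: how many internal-link clicks it takes to reach each page from the homepage. The sketch below runs a breadth-first search over a hypothetical internal-link graph (in practice you would build it from a site crawl) and also flags orphan pages that no internal link reaches at all.

```python
from collections import deque

# Hypothetical internal-link graph: page -> pages it links to.
LINKS = {
    "/": ["/blog/", "/products/"],
    "/blog/": ["/blog/technical-seo", "/blog/site-speed"],
    "/products/": ["/products/widget"],
    "/blog/technical-seo": ["/blog/site-speed", "/products/widget"],
    "/blog/site-speed": [],
    "/products/widget": [],
    "/old-landing-page": [],  # nothing links here: an orphan page
}

def click_depths(links: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def orphan_pages(links: dict[str, list[str]], start: str = "/") -> list[str]:
    """Pages in the graph that cannot be reached from `start` by following links."""
    return sorted(set(links) - set(click_depths(links, start)))

if __name__ == "__main__":
    for page, depth in sorted(click_depths(LINKS).items(), key=lambda kv: kv[1]):
        print(depth, page)
    print("orphans:", orphan_pages(LINKS))
```

Pages that sit many clicks deep, or that are orphaned, are harder for both visitors and crawlers to find, so they are natural candidates for additional internal links.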

Creating an XML Sitemap

An XML sitemap is a file that lists a website’s important pages, helping search engines crawl and index its content more efficiently. It helps ensure that all important pages can be discovered and considered for indexing. A sitemap is especially useful for larger sites with complex structures or frequently changing content, where crawlers might otherwise miss some pages.
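As a minimal sketch, a sitemap following the sitemaps.org protocol can be generated with Python’s standard-library `xml.etree.ElementTree`. The URLs and dates are hypothetical, and `build_sitemap` is an illustrative helper name, not part of any SEO tool.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(entries: list[tuple[str, str]]) -> str:
    """Build sitemap XML from (absolute_url, lastmod 'YYYY-MM-DD') pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as '&', as the protocol requires.
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

if __name__ == "__main__":
    print(build_sitemap([
        ("https://www.example.com/", "2024-01-15"),
        ("https://www.example.com/blog/technical-seo", "2024-02-01"),
    ]))
```

The protocol caps a single sitemap file at 50,000 URLs and 50 MB uncompressed, so very large sites split their URLs across several files. Once generated, the sitemap can be submitted in Google Search Console or referenced from robots.txt.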

Site Speed Optimization

Site speed optimization aims to make websites load and respond faster. A faster site is more pleasant to use, has lower bounce rates, and may rank higher in search engine results.

Importance of Fast Loading Times

Fast-loading pages keep users happier and more engaged, which helps lower bounce rates and increase conversions. Because page speed is a ranking factor, search engines also tend to rank fast-loading sites higher. To improve site speed, optimize images, use browser caching, and keep server response times as short as possible.

Tools for Measuring Site Speed

Google PageSpeed Insights and GTmetrix analyze website performance by reporting loading times and other performance metrics. These tools help webmasters make effective changes by pinpointing bottlenecks on a site, such as large files or render-blocking scripts that delay the browser from displaying the page.
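PageSpeed Insights reports Core Web Vitals alongside its other metrics. The sketch below rates measured values against the "good" and "poor" boundaries Google has published for Largest Contentful Paint (LCP), Interaction to Next Paint (INP), and Cumulative Layout Shift (CLS). These thresholds reflect Google’s documentation at the time of writing and are assumptions here, so verify them against current guidance. The sample measurements are hypothetical.

```python
# (good_upper_bound, needs_improvement_upper_bound) per metric, as published by
# Google at the time of writing. Google evaluates them at the 75th percentile of page loads.
THRESHOLDS = {
    "LCP": (2.5, 4.0),   # Largest Contentful Paint, seconds
    "INP": (200, 500),   # Interaction to Next Paint, milliseconds
    "CLS": (0.1, 0.25),  # Cumulative Layout Shift, unitless score
}

def rate(metric: str, value: float) -> str:
    """Classify one measurement as 'good', 'needs improvement', or 'poor'."""
    good, needs_improvement = THRESHOLDS[metric]
    if value <= good:
        return "good"
    if value <= needs_improvement:
        return "needs improvement"
    return "poor"

def rate_page(measurements: dict[str, float]) -> dict[str, str]:
    """Rate every metric in a {metric: value} dict."""
    return {metric: rate(metric, value) for metric, value in measurements.items()}

if __name__ == "__main__":
    # Hypothetical 75th-percentile values for one page.
    print(rate_page({"LCP": 3.1, "INP": 180, "CLS": 0.02}))
```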

Best Practices for Improving Site Speed

Enabling GZIP or other compression methods, minifying CSS, JavaScript, and HTML files, and compressing images can all noticeably improve load times. Other ways to speed up loading and improve the user experience include prioritizing above-the-fold content, tuning server configuration, and using content delivery networks (CDNs).
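Compression is normally switched on in the web server or CDN configuration rather than written by hand, but the decision it makes is simple. This hypothetical `maybe_gzip` helper compresses a response with Python’s `gzip` module only when the content type is text-like and the client advertises gzip support.

```python
import gzip

# Text-like types that usually compress well; JPEG/PNG images are already compressed.
COMPRESSIBLE_TYPES = ("text/html", "text/css", "application/javascript", "image/svg+xml")

def maybe_gzip(body: bytes, content_type: str, accept_encoding: str) -> tuple[bytes, dict[str, str]]:
    """Return (body, extra_headers), gzipping the body when appropriate.

    Simplification: checks only whether 'gzip' appears in Accept-Encoding and
    ignores quality values such as 'gzip;q=0'.
    """
    client_accepts_gzip = "gzip" in accept_encoding.lower()
    if client_accepts_gzip and content_type.startswith(COMPRESSIBLE_TYPES):
        headers = {"Content-Encoding": "gzip", "Vary": "Accept-Encoding"}
        return gzip.compress(body), headers
    return body, {}

if __name__ == "__main__":
    page = ("<div class='card'><p>Technical SEO</p></div>\n" * 200).encode("utf-8")
    compressed, headers = maybe_gzip(page, "text/html; charset=utf-8", "gzip, deflate, br")
    print(len(page), "bytes ->", len(compressed), "bytes", headers)
```

The `Vary: Accept-Encoding` header tells caches that compressed and uncompressed versions of the same URL must be stored separately.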

Mobile-Friendliness

Mobile-friendliness is critical for websites in 2024 because so many people browse the internet on their phones. It describes how well a website works and displays on devices of different screen sizes, from phones and tablets to desktop computers; a mobile-friendly site is easy to use, read, and navigate on all of them. Under mobile-first indexing, Google primarily uses the mobile version of a site’s content for indexing and ranking, so optimizing for mobile improves the user experience, lowers bounce rates, and supports SEO results. Key practices include responsive design, fast loading on mobile networks, simple navigation menus, and buttons large enough to tap easily. Making your site mobile-friendly not only meets user needs but also improves its overall performance.
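Responsive design depends on a viewport meta tag that tells mobile browsers to match the device width. A quick audit can check for it with Python’s standard-library `html.parser`. This is a minimal sketch with hypothetical class and function names, and a passing result is only one signal, not proof that a page is mobile-friendly.

```python
from html.parser import HTMLParser

class ViewportFinder(HTMLParser):
    """Records the content attribute of a <meta name="viewport"> tag, if present."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: str | None = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        # Attribute values can be None, so fall back to "" before lowercasing.
        if tag == "meta" and (attributes.get("name") or "").lower() == "viewport":
            self.viewport = attributes.get("content")

def has_responsive_viewport(html: str) -> bool:
    """True if the page declares a viewport that uses width=device-width."""
    finder = ViewportFinder()
    finder.feed(html)
    finder.close()
    return finder.viewport is not None and "width=device-width" in finder.viewport.replace(" ", "")

if __name__ == "__main__":
    page = '<head><meta name="viewport" content="width=device-width, initial-scale=1"></head>'
    print(has_responsive_viewport(page))
```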

HTTPS and Website Security

In 2024, HTTPS and website security are essential for protecting users’ data and building trust. HTTPS, traditionally indicated by a padlock icon in the browser’s address bar, encrypts data sent between a user’s browser and a website so that it cannot easily be read or altered in transit. This protection is especially important for sensitive data such as login credentials and payment information. HTTPS also helps SEO, because Google uses it as a ranking signal. To secure their sites, website owners should obtain an SSL/TLS certificate, permanently (301) redirect all HTTP URLs to their HTTPS equivalents, and keep their security measures up to date. By prioritizing HTTPS, businesses create a safer online space and earn their audience’s trust.

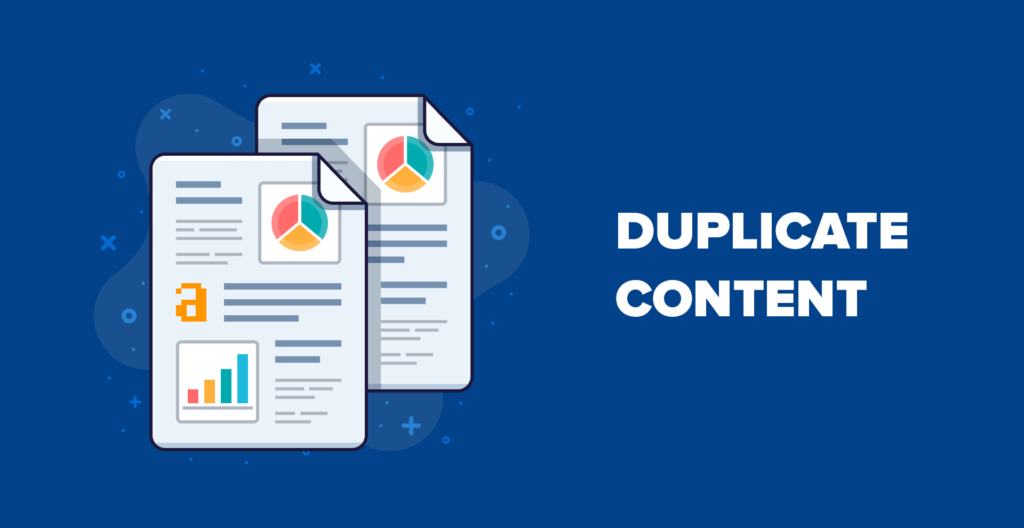

Duplicate Content

Duplicate content is identical or very similar content that appears on multiple pages within a site or across different sites. It can hurt rankings because search engines cannot tell which version to index and rank, so ranking signals are split between the copies instead of being consolidated on one. In the worst cases, content copied deliberately to manipulate rankings can lead to penalties. To avoid these problems, webmasters should use canonical tags to indicate the preferred version of a page, avoid copying content from other sites, and consolidate similar pages wherever possible. Keeping content unique helps a site maintain clean SEO and improve its organic search performance.
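Duplicates often come from URL variants of the same page: mixed-case hosts, trailing slashes, or campaign tracking parameters. The sketch below normalizes URLs, groups variants that collapse to the same address, and emits a canonical link tag. The normalization rules (forcing HTTPS, dropping trailing slashes, and the `TRACKING_PARAMS` list) are assumptions for illustration; adapt them to how your own site actually serves content.

```python
import html
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Assumption: these parameters only track campaigns and never change page content.
TRACKING_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "gclid"}

def normalize(url: str) -> str:
    """Collapse common URL variants into one preferred form."""
    parts = urlsplit(url)
    query = [(k, v) for k, v in parse_qsl(parts.query) if k not in TRACKING_PARAMS]
    path = parts.path.rstrip("/") or "/"
    # Assumes the site is served over HTTPS; drops the fragment.
    return urlunsplit(("https", parts.netloc.lower(), path, urlencode(sorted(query)), ""))

def group_duplicates(urls: list[str]) -> dict[str, list[str]]:
    """Group URLs by their normalized form; groups with 2+ members are likely duplicates."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return dict(groups)

def canonical_tag(url: str) -> str:
    """HTML <link rel="canonical"> pointing at the normalized URL."""
    return f'<link rel="canonical" href="{html.escape(normalize(url), quote=True)}">'

if __name__ == "__main__":
    variants = [
        "http://WWW.Example.com/shoes/",
        "https://www.example.com/shoes?utm_source=newsletter",
        "https://www.example.com/shoes",
    ]
    print(group_duplicates(variants))
    print(canonical_tag(variants[1]))
```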

Structured Data and Schema Markup

Structured data and schema markup help search engines better understand a website’s content, which can make it more visible and improve click-through rates. Schema markup uses a standardized vocabulary (Schema.org) to describe page content in a form that search engines can interpret and display. It lets websites highlight specific information such as reviews, events, products, and frequently asked questions (FAQs) directly in search results as rich snippets, giving searchers more context. Adding structured data makes a page more appealing to both users and search engines. Tools such as Google’s Structured Data Markup Helper make implementation easier, helping websites use this technique to strengthen their online presence.
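Structured data is commonly embedded as JSON-LD in a `<script type="application/ld+json">` tag. As a minimal sketch, the hypothetical `faq_jsonld` helper below builds Schema.org `FAQPage` markup from question/answer pairs using Python’s `json` module. Valid markup makes a page eligible for rich results, but whether one is actually shown is up to the search engine.

```python
import json

def faq_jsonld(faqs: list[tuple[str, str]]) -> str:
    """Return a JSON-LD <script> block describing an FAQ page (Schema.org FAQPage)."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in faqs
        ],
    }
    # Escape "</" so answer text cannot prematurely close the <script> element.
    payload = json.dumps(data, indent=2).replace("</", "<\\/")
    return '<script type="application/ld+json">\n' + payload + "\n</script>"

if __name__ == "__main__":
    print(faq_jsonld([
        ("What is technical SEO?",
         "Optimizing how search engines crawl, index, and render a website."),
    ]))
```

Before publishing, markup like this can be checked with Google’s Rich Results Test.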

Handling 404 Errors and Redirects

Handling 404 errors and redirects properly is important for maintaining a smooth user experience and preserving SEO value. A 404 error occurs when a requested page cannot be found, usually because its content has been moved or removed. Webmasters can soften the impact with custom 404 pages that point users to the homepage or related content. For pages that have moved permanently, 301 redirects send both users and search engines to the new URL and pass along link value. Regularly checking for and fixing 404 errors with tools like Google Search Console helps preserve your site’s integrity, keeps users coming back, and prevents broken links from hurting your SEO.
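Over time, redirect rules can pile up into chains (A to B to C) or even loops, which slow users down and waste crawl effort. The sketch below follows a hypothetical redirect map to see where an old URL ends up, reports whether the destination is live (200) or missing (404), and flattens chains so every old URL points straight at its final destination. The paths and the `max_hops` limit are illustrative assumptions.

```python
# Hypothetical redirect map (old path -> new path, each served as a 301) and live pages.
REDIRECTS = {
    "/old-blog/technical-seo": "/blog/technical-seo-2023",
    "/blog/technical-seo-2023": "/blog/technical-seo",
    "/discontinued-product": "/products/",
}
LIVE_PAGES = {"/", "/products/", "/blog/technical-seo"}

def resolve(path: str, redirects: dict[str, str], live: set[str], max_hops: int = 10) -> tuple[int, str, int]:
    """Follow redirects from `path`; return (final_status, final_path, hop_count)."""
    seen = {path}
    hops = 0
    while path in redirects:
        path = redirects[path]
        hops += 1
        if path in seen or hops > max_hops:
            raise ValueError(f"redirect loop or overly long chain at {path}")
        seen.add(path)
    return (200 if path in live else 404), path, hops

def flatten(redirects: dict[str, str]) -> dict[str, str]:
    """Point every old path directly at its final destination, removing chains."""
    return {old: resolve(old, redirects, live=set())[1] for old in redirects}

if __name__ == "__main__":
    print(resolve("/old-blog/technical-seo", REDIRECTS, LIVE_PAGES))
    print(resolve("/missing-page", REDIRECTS, LIVE_PAGES))
    print(flatten(REDIRECTS))
```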

Keeping Up with Technical SEO Trends in 2024

To stay competitive in search results in 2024, you need to keep up with technical SEO trends. As search engines evolve, it is important to follow developments such as AI-driven SEO, Core Web Vitals, and voice search optimization. The core goals remain the same: use structured data, make the site faster, and optimize for mobile-first indexing. Monitoring Google’s algorithm updates and adjusting strategy as needed keeps websites compliant with search engine guidelines and competitive in SERPs. Joining SEO communities, attending webinars, and following thought leaders in the field can help you stay ahead. By adopting new technologies and following emerging trends, businesses can strengthen their online presence and attract more organic traffic.

Conclusion

Understanding technical SEO is essential if you want your website to succeed in 2024. By making their sites faster, mobile-friendly, and well structured, and by prioritizing security with HTTPS, businesses can improve both user experience and visibility. To stay competitive, they also need to use structured data, handle 404 errors correctly, and keep up with emerging trends such as AI-driven SEO. Websites that follow these best practices and adapt as algorithms change can earn better rankings, attract more organic traffic, and continue to reach their intended audiences.